Discover what compilers really are and learn how to build one from scratch in C++. This hands-on series is perfect for beginners who want to master compiler fundamentals step by step.

Introduction

Ever wondered what happens between writing code and seeing it run? Let’s demystify the process — by building our own compiler from scratch. You’ve probably written code in languages like C++, Python, or JavaScript. You hit “Run” or “Build”… and the program works. But have you ever stopped to ask:

What’s actually happening behind the scenes?

How does a computer understand your high-level logic? What turns your code into something your machine can execute? The answer lies in a powerful, complex, and often mysterious piece of software: the compiler.

In this blog series, we’ll not only explain how compilers work — we’ll build one from scratch in C++. Don’t worry if you’re new to compiler theory — we’ll break everything down step by step, making it beginner-friendly yet technically sound.

What is a Compiler?

At its core, a compiler is a program that translates code written by humans into code understood by machines. You might’ve heard that before — but let’s break it down in layers, so you don’t just remember it, you understand it.

Humans Speak Languages. So Do Computers.

When you write:

int a = 5;

You’re using a high-level language, one that’s readable, expressive, and close to human thinking. But computers don’t understand C++, Java, or Python directly. A CPU only executes machine instructions, which can be written in human-readable x86 assembly like:

MOV EAX, 5

Or worse, as the raw bytes the CPU actually decodes:

10111000 00000101 00000000 00000000 00000000

That’s machine code, the native language of your CPU. It’s fast, brutally efficient, and unreadable to most of us. A compiler is the bridge between the two. It transforms your logic and intentions into something your hardware can execute.
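To see where those bits come from, here is a tiny "assembler" for this one instruction. It is a minimal sketch that hard-codes a single x86 encoding: opcode 0xB8 (MOV EAX, imm32) followed by the 32-bit immediate in little-endian byte order, so the complete instruction is five bytes. The function names encodeMovEaxImm32 and toBinary are hypothetical; real assemblers handle thousands of instruction forms.

```cpp
#include <bitset>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Encodes the x86 instruction "MOV EAX, imm32" as raw bytes.
// 0xB8 is the opcode for "move a 32-bit immediate into EAX";
// the immediate value follows in little-endian byte order.
std::vector<std::uint8_t> encodeMovEaxImm32(std::uint32_t value) {
    std::vector<std::uint8_t> bytes{0xB8};
    for (int i = 0; i < 4; ++i) {
        // Least significant byte first (little-endian).
        bytes.push_back(static_cast<std::uint8_t>((value >> (8 * i)) & 0xFFu));
    }
    return bytes;
}

// Renders each byte as an 8-digit binary group, separated by spaces.
std::string toBinary(const std::vector<std::uint8_t>& bytes) {
    std::string out;
    for (std::size_t i = 0; i < bytes.size(); ++i) {
        if (i > 0) out += ' ';
        out += std::bitset<8>(bytes[i]).to_string();
    }
    return out;
}
```

Calling toBinary(encodeMovEaxImm32(5)) gives 10111000 00000101 00000000 00000000 00000000: one opcode byte, then the value 5 spread across four bytes.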

Compilation Is Translation, But Also More

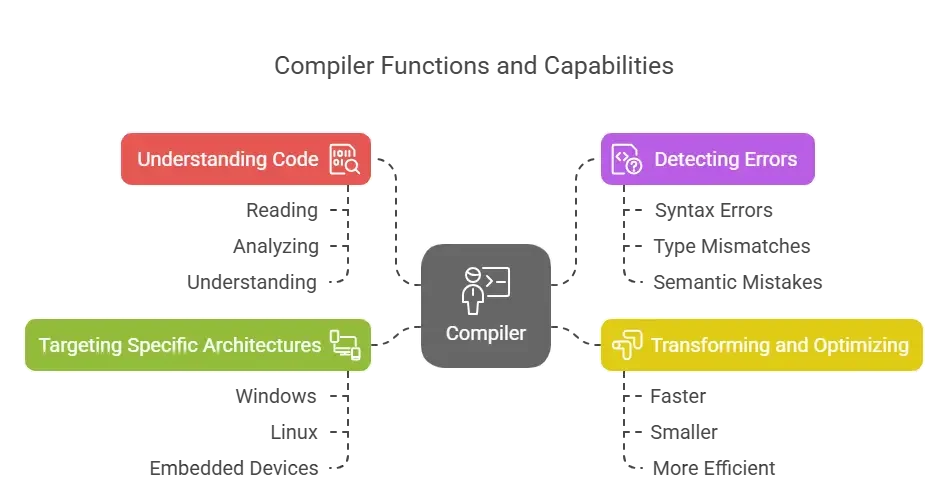

It’s tempting to think that compilers just “translate” code. But they do much more than that. Here’s what really happens:

- Understanding Your Code: A compiler doesn’t blindly convert line-by-line. It actually reads, analyzes, and understands the entire structure of your code.

- Detecting Errors: It checks for syntax errors (like a missing ;), type mismatches (e.g., adding a number to a string in a language that forbids it), and other semantic mistakes (like using an undeclared variable).

- Transforming and Optimizing: A compiler often restructures your code internally to make it faster, smaller, or more efficient, without changing what it does.

- Targeting Specific Architectures: A good compiler can take the same high-level code and compile it for different CPU architectures (such as x86 or ARM) and platforms (Windows, Linux, or even embedded devices), largely by swapping in a different backend.

So… Is a Compiler Just One Program?

Not quite. Think of a compiler as a pipeline of programs, each with a specific job. For example:

- One part scans your code for tokens.

- Another builds a tree to represent your program.

- Another checks your types.

- Yet another generates intermediate code.

- And finally, the backend spits out machine code or an executable.

Each of these stages is a layer of abstraction, and as we’ll see throughout this series, we’ll build many of these layers ourselves.

But Wait, Don’t Interpreters Do That Too?

Yes — and no. Both compilers and interpreters “run” your code in some way, but the approach is very different:

| | Compiler | Interpreter |
|---|---|---|
| How | Translates the whole program in advance | Executes line-by-line at runtime |
| Speed | Fast execution (after compilation) | Slower (no precompiled code) |
| Output | Machine code or executable | No separate output (direct execution) |
| Examples | C, C++, Go, Rust | Python, JavaScript, Ruby |
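To make the interpreter column concrete, here is a minimal sketch of a tree-walking interpreter. It assumes the program has already been parsed into a small expression tree; the Node type and the num, var, and bin helpers are hypothetical names used only here. The interpreter walks the tree and computes the answer on the spot, producing no separate output file.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <stdexcept>
#include <string>
#include <utility>

// One node of a tiny expression tree.
struct Node {
    std::string op;                     // "num", "var", or a binary operator: "+", "-", "*"
    int value = 0;                      // used when op == "num"
    std::string name;                   // used when op == "var"
    std::shared_ptr<Node> left, right;  // used for binary operators
};
using NodePtr = std::shared_ptr<Node>;

NodePtr num(int v) { auto n = std::make_shared<Node>(); n->op = "num"; n->value = v; return n; }
NodePtr var(const std::string& s) { auto n = std::make_shared<Node>(); n->op = "var"; n->name = s; return n; }
NodePtr bin(const std::string& op, NodePtr l, NodePtr r) {
    auto n = std::make_shared<Node>();
    n->op = op;
    n->left = std::move(l);
    n->right = std::move(r);
    return n;
}

// Tree-walking interpreter: evaluates the tree directly, every time it runs.
int interpret(const NodePtr& n, const std::map<std::string, int>& env) {
    if (n->op == "num") return n->value;
    if (n->op == "var") return env.at(n->name);  // throws std::out_of_range if unbound
    int l = interpret(n->left, env);
    int r = interpret(n->right, env);
    if (n->op == "+") return l + r;
    if (n->op == "-") return l - r;
    if (n->op == "*") return l * r;
    throw std::runtime_error("unknown operator: " + n->op);
}
```

Evaluating x + 2 * 3 with x bound to 4 yields 10. Change x and run it again, and the whole tree is walked again from scratch; nothing was translated ahead of time.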

But real life is messy — many languages today blur the lines:

- Java is compiled to bytecode by the javac compiler. This bytecode is then executed by the Java Virtual Machine (JVM), which may interpret it or apply Just-In-Time (JIT) compilation to speed up execution.

- Python source code is first compiled into Python bytecode (.pyc files), which is then interpreted by the CPython virtual machine.

- Modern JavaScript engines like Google’s V8 (used in Chrome and Node.js) start by interpreting JavaScript, then apply JIT compilation to frequently-used code paths for performance.
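The bytecode approach used by Java and CPython can be sketched in a few dozen lines. A compile step translates an expression tree once into a flat list of instructions, and a separate virtual machine executes that list using a stack. This is an illustration under our own assumptions: the instruction set (Push, Add, Sub, Mul) and all names are invented for this example and are not JVM or CPython bytecode. A JIT would go one step further and turn frequently executed bytecode into native machine code.

```cpp
#include <cassert>
#include <memory>
#include <stdexcept>
#include <vector>

// A tiny expression tree: integer leaves and binary operators.
struct Node {
    char op;                            // 'n' for a number leaf, or '+', '-', '*'
    int value;                          // used when op == 'n'
    std::shared_ptr<Node> left, right;  // used for operators
};
using NodePtr = std::shared_ptr<Node>;

NodePtr num(int v) { return std::make_shared<Node>(Node{'n', v, nullptr, nullptr}); }
NodePtr bin(char op, NodePtr l, NodePtr r) { return std::make_shared<Node>(Node{op, 0, l, r}); }

// The (invented) bytecode instruction set for a stack machine.
enum class OpCode { Push, Add, Sub, Mul };
struct Instr {
    OpCode op;
    int operand;  // only meaningful for Push
};

// Compile step: emit operands before their operator (post-order),
// which is the order a stack machine needs.
void compile(const NodePtr& n, std::vector<Instr>& code) {
    if (n->op == 'n') {
        code.push_back({OpCode::Push, n->value});
        return;
    }
    compile(n->left, code);
    compile(n->right, code);
    switch (n->op) {
        case '+': code.push_back({OpCode::Add, 0}); break;
        case '-': code.push_back({OpCode::Sub, 0}); break;
        case '*': code.push_back({OpCode::Mul, 0}); break;
        default: throw std::runtime_error("unknown operator");
    }
}

// Virtual machine: executes the instruction list with an operand stack.
int run(const std::vector<Instr>& code) {
    std::vector<int> stack;
    for (const Instr& in : code) {
        if (in.op == OpCode::Push) {
            stack.push_back(in.operand);
            continue;
        }
        int rhs = stack.back(); stack.pop_back();
        int lhs = stack.back(); stack.pop_back();
        if (in.op == OpCode::Add) stack.push_back(lhs + rhs);
        else if (in.op == OpCode::Sub) stack.push_back(lhs - rhs);
        else stack.push_back(lhs * rhs);  // OpCode::Mul
    }
    return stack.back();
}
```

Compiling 10 - 2 * 3 produces Push 10, Push 2, Push 3, Mul, Sub. The VM can then run that list as many times as needed without re-reading the source, which is the main payoff of the extra compile step.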

We’ll be focusing on Ahead-of-Time (AOT) compilation in this series — compiling your code fully into a native executable before it runs. You might not know what JIT or AOT mean yet — and that’s okay. We’ll explore both of them later in the series with more examples and real-world context.

What Does a Compiler Actually Produce?

Depending on its design and target environment, a compiler can generate:

- Assembly code – human-readable, low-level instructions for a specific architecture (e.g., x86, ARM).

- Bytecode – platform-independent intermediate representation, used by virtual machines (e.g., JVM for Java, CLR for C#).

- Machine code – raw binary instructions executed directly by the CPU. This is what actually runs on your device.

- Object files or executables – compiled outputs like .o, .obj, .exe, .out, or .bin that can be linked or run.

For Flare, our goal is to go all the way — from .flare source files to a real executable binary using LLVM under the hood.

Modern Compilers Are Everywhere

Compilers aren’t just for programming languages like C or Rust — they’re all around us:

- Web browsers run compiled and optimized JavaScript, typically by combining an interpreter with JIT compilation.

- Game engines use compiled shaders (written in GLSL/HLSL) to run efficiently on GPUs.

- AI frameworks like TensorFlow and PyTorch use compiler toolchains (e.g., XLA, TVM) to optimize model execution across CPUs, GPUs, and TPUs.

- Mobile and desktop apps are compiled to native code for different platforms and devices.

- Even languages like TypeScript, which compiles to JavaScript, rely on compilers to add structure and catch errors early.

Whether it’s Rust, C++, Swift, Kotlin, or even TypeScript, compilers are the invisible engines turning human-readable code into blazing-fast, portable, and optimized software.

The Compiler Pipeline: How Code Becomes Executable

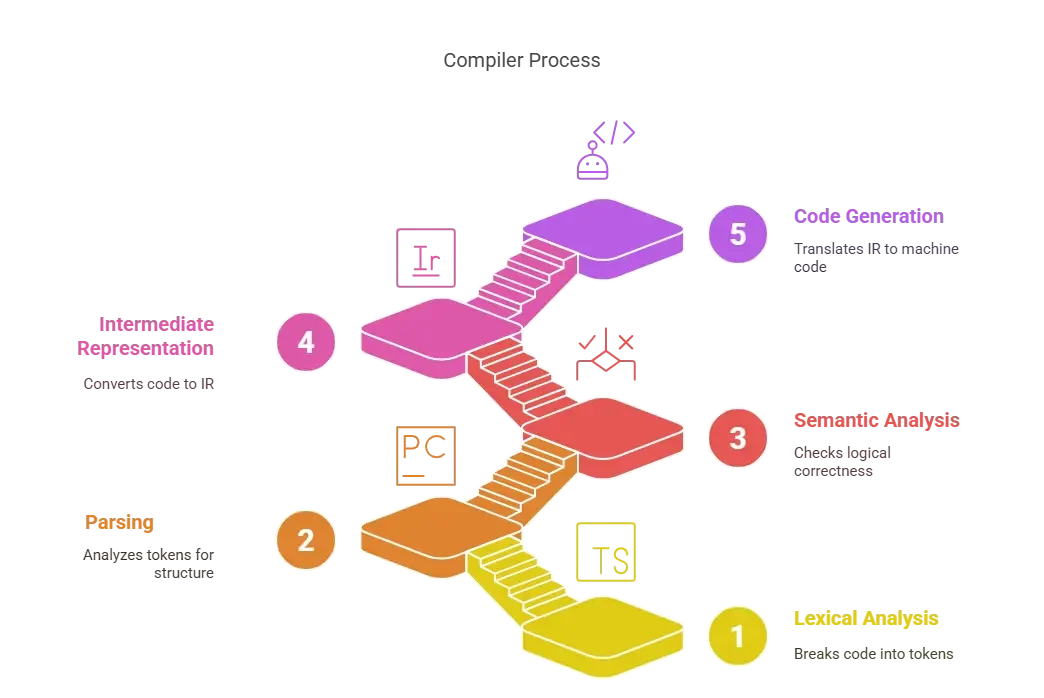

A compiler isn’t a single, monolithic system. It’s made up of well-defined stages, each handling a specific task. Let’s explore these stages. (We’ll also be building each of them as we create our compiler.)

1. Lexical Analysis (Tokenization)

Breaks the raw source code into tokens — keywords, identifiers, symbols, etc.

Example: if (x == 1) becomes [“if”, “(”, “x”, “==”, “1”, “)”]
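A lexer for this kind of input can be sketched with the C++ standard library's std::regex. This is a minimal, hypothetical tokenizer, not Flare's real lexer: the token categories, the keyword list, and the tokenize function are assumptions chosen to reproduce the example above. Each capture group in the pattern matches one token class, and std::regex_constants::match_continuous forces every match to start exactly where the previous token ended.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <regex>
#include <stdexcept>
#include <string>
#include <vector>

struct Token {
    std::string kind;  // "keyword", "identifier", "number", or "symbol"
    std::string text;  // the exact characters matched (the lexeme)
};

std::vector<Token> tokenize(const std::string& src) {
    // Group 1: identifiers/keywords, group 2: integer literals, group 3: operators and punctuation.
    // Two-character operators are listed first so that "==" stays a single token.
    static const std::regex tokenRe(
        R"re(([A-Za-z_][A-Za-z0-9_]*)|([0-9]+)|(==|!=|<=|>=|-|[+*/=<>(){};]))re");
    static const std::vector<std::string> keywords = {"if", "else", "while", "return"};

    std::vector<Token> tokens;
    auto it = src.cbegin();
    while (true) {
        // Skip whitespace between tokens.
        while (it != src.cend() && std::isspace(static_cast<unsigned char>(*it))) ++it;
        if (it == src.cend()) break;

        std::smatch m;
        // match_continuous: the match must begin exactly at 'it'.
        if (!std::regex_search(it, src.cend(), m, tokenRe, std::regex_constants::match_continuous)) {
            throw std::runtime_error(std::string("unexpected character: ") + *it);
        }
        if (m[1].matched) {
            const std::string word = m[1].str();
            const bool isKeyword = std::find(keywords.begin(), keywords.end(), word) != keywords.end();
            tokens.push_back({isKeyword ? "keyword" : "identifier", word});
        } else if (m[2].matched) {
            tokens.push_back({"number", m[2].str()});
        } else {
            tokens.push_back({"symbol", m[3].str()});
        }
        it = m[0].second;  // continue right after this token
    }
    return tokens;
}
```

tokenize("if (x == 1)") yields six tokens: the keyword if, the symbols ( and ), the identifier x, the operator ==, and the number 1. The ordering inside group 3 matters because regex alternation takes the first alternative that matches; if the single-character class came first, == would be split into two = tokens.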

2. Parsing

Analyzes token sequences for grammatical structure. Checks if the code is syntactically correct and builds a parse tree.
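To show what "checking grammatical structure and building a tree" looks like in code, here is a small recursive-descent parser for arithmetic expressions. Note that this is a different technique from the Bison-generated parser the series builds later (Bison produces table-driven LALR(1) parsers by default); it is hand-written here only because it fits in one listing. The grammar, the Node type, and the show helper are assumptions for illustration, and for brevity numbers and identifiers are both treated as plain leaves.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <memory>
#include <stdexcept>
#include <string>
#include <utility>

struct Node {
    std::string text;                   // operator symbol, or the leaf's characters
    std::unique_ptr<Node> left, right;  // null for leaves
};

class Parser {
public:
    explicit Parser(std::string src) : src_(std::move(src)) {}

    std::unique_ptr<Node> parse() {
        auto tree = expr();
        skipSpaces();
        if (pos_ != src_.size()) throw std::runtime_error("syntax error: unexpected input");
        return tree;
    }

private:
    std::string src_;
    std::size_t pos_ = 0;

    void skipSpaces() {
        while (pos_ < src_.size() && std::isspace(static_cast<unsigned char>(src_[pos_]))) ++pos_;
    }
    char peek() { skipSpaces(); return pos_ < src_.size() ? src_[pos_] : '\0'; }

    static std::unique_ptr<Node> makeBinary(char op, std::unique_ptr<Node> l, std::unique_ptr<Node> r) {
        auto n = std::make_unique<Node>();
        n->text = std::string(1, op);
        n->left = std::move(l);
        n->right = std::move(r);
        return n;
    }

    // expr := term (('+' | '-') term)*
    std::unique_ptr<Node> expr() {
        auto lhs = term();
        while (peek() == '+' || peek() == '-') {
            char op = src_[pos_++];
            auto rhs = term();
            lhs = makeBinary(op, std::move(lhs), std::move(rhs));
        }
        return lhs;
    }

    // term := factor (('*' | '/') factor)*   (binds tighter than + and -)
    std::unique_ptr<Node> term() {
        auto lhs = factor();
        while (peek() == '*' || peek() == '/') {
            char op = src_[pos_++];
            auto rhs = factor();
            lhs = makeBinary(op, std::move(lhs), std::move(rhs));
        }
        return lhs;
    }

    // factor := leaf | '(' expr ')'
    std::unique_ptr<Node> factor() {
        if (peek() == '(') {
            ++pos_;
            auto inner = expr();
            if (peek() != ')') throw std::runtime_error("syntax error: expected ')'");
            ++pos_;
            return inner;
        }
        std::size_t start = pos_;
        while (pos_ < src_.size() &&
               (std::isalnum(static_cast<unsigned char>(src_[pos_])) || src_[pos_] == '_')) ++pos_;
        if (start == pos_) throw std::runtime_error("syntax error: expected a value");
        auto leaf = std::make_unique<Node>();
        leaf->text = src_.substr(start, pos_ - start);
        return leaf;
    }
};

// Renders a tree as a prefix S-expression, e.g. "(+ 1 (* 2 3))".
std::string show(const Node& n) {
    if (!n.left) return n.text;
    return "(" + n.text + " " + show(*n.left) + " " + show(*n.right) + ")";
}
```

The layering of the grammar gives * higher precedence than +, so "1 + 2 * 3" parses as (+ 1 (* 2 3)), while an incomplete input such as "1 + " is rejected with a syntax error.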

3. Semantic Analysis

Checks for logical correctness — are variables declared? Do types match? Are functions called correctly?
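A minimal sketch of the first two checks, assuming a toy program representation of our own: each statement either declares a typed variable or assigns one variable to another. A symbol table (here a std::map from name to declared type) records declarations in order, so every later statement can be checked against it. The Stmt layout, the type names, and the error messages are hypothetical.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// A toy statement: either "declare name : type" or "assign name = source".
struct Stmt {
    std::string kind;    // "declare" or "assign"
    std::string name;    // variable being declared or assigned
    std::string type;    // declared type (only for "declare"), e.g. "int" or "string"
    std::string source;  // variable being read (only for "assign")
};

// Walks the program in order, using a symbol table to catch semantic errors.
std::vector<std::string> check(const std::vector<Stmt>& program) {
    std::map<std::string, std::string> symbols;  // variable name -> declared type
    std::vector<std::string> errors;
    for (const Stmt& s : program) {
        if (s.kind == "declare") {
            if (symbols.count(s.name) != 0) {
                errors.push_back("redeclaration of '" + s.name + "'");
            } else {
                symbols[s.name] = s.type;
            }
        } else if (s.kind == "assign") {
            auto target = symbols.find(s.name);
            auto source = symbols.find(s.source);
            if (target == symbols.end()) errors.push_back("use of undeclared variable '" + s.name + "'");
            if (source == symbols.end()) errors.push_back("use of undeclared variable '" + s.source + "'");
            if (target != symbols.end() && source != symbols.end() && target->second != source->second) {
                errors.push_back("type mismatch: cannot assign " + source->second + " to " + target->second);
            }
        }
    }
    return errors;
}
```

Note that the checker keeps going after the first problem and collects every error, which is how most compilers report several mistakes in one run.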

4. Intermediate Representation (IR)

The code is converted into a platform-independent intermediate format.

We’ll be using LLVM IR — a widely adopted system for code generation and optimization.
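LLVM IR is a typed format in static single assignment (SSA) form, where an instruction looks like %t1 = add i32 %a, %b. The basic idea of lowering a tree into a linear IR can be sketched with a simpler, untyped three-address code: every operator becomes one instruction whose result goes into a fresh temporary that is assigned exactly once. This is not LLVM IR; the TacGenerator class and the t1, t2, ... naming are assumptions for illustration.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Expression tree: leaves hold a variable name or literal; inner nodes hold an operator.
struct Node {
    std::string text;
    std::shared_ptr<Node> left, right;  // both null for leaves
};
using NodePtr = std::shared_ptr<Node>;

NodePtr leaf(const std::string& t) { return std::make_shared<Node>(Node{t, nullptr, nullptr}); }
NodePtr bin(const std::string& op, NodePtr l, NodePtr r) {
    return std::make_shared<Node>(Node{op, std::move(l), std::move(r)});
}

// Lowers a tree into three-address code: one instruction per operator,
// each writing a fresh temporary that is never reassigned.
class TacGenerator {
public:
    // Emits instructions for n and returns the operand that holds its value.
    std::string emit(const NodePtr& n) {
        if (!n->left) return n->text;  // leaves are used directly as operands
        std::string lhs = emit(n->left);
        std::string rhs = emit(n->right);
        std::string temp = "t" + std::to_string(++counter_);
        code_.push_back(temp + " = " + lhs + " " + n->text + " " + rhs);
        return temp;
    }

    const std::vector<std::string>& code() const { return code_; }

private:
    int counter_ = 0;
    std::vector<std::string> code_;
};
```

For (a + b) * (a - 3), the generator emits t1 = a + b, then t2 = a - 3, then t3 = t1 * t2: operands are always computed before the instruction that uses them.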

5. Code Generation and Optimization

Translates IR into machine code for the target CPU. After linking, this is where you get an actual .exe, .out, or other binary.

Optimizations like constant folding or dead code elimination happen at this stage too, usually on the IR before machine code is emitted.
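Constant folding is easy to sketch on an expression tree: fold the children first, and if both operands of an operator are now constants, replace the whole node with the computed value. This is a minimal illustration with hypothetical names (Node, fold, show); in an LLVM-based compiler this kind of rewriting is typically left to LLVM's optimization passes on the IR. A production folder must also follow the language's overflow rules, which this sketch ignores.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

struct Node;
using NodePtr = std::shared_ptr<Node>;

// Expression tree node: a constant, a variable, or a binary operator.
struct Node {
    char op = 0;           // '+', '-', '*' for operators; 0 for leaves
    bool isConst = false;  // true for integer-constant leaves
    long long value = 0;   // valid when isConst
    std::string name;      // valid for variable leaves
    NodePtr left, right;   // valid for operators
};

NodePtr constant(long long v) { auto n = std::make_shared<Node>(); n->isConst = true; n->value = v; return n; }
NodePtr variable(const std::string& s) { auto n = std::make_shared<Node>(); n->name = s; return n; }
NodePtr binary(char op, NodePtr l, NodePtr r) {
    auto n = std::make_shared<Node>();
    n->op = op;
    n->left = std::move(l);
    n->right = std::move(r);
    return n;
}

// Constant folding, bottom-up: simplify children first, then collapse
// this operator if both of its operands are now constants.
NodePtr fold(const NodePtr& n) {
    if (n->op == 0) return n;  // leaves cannot be simplified further
    NodePtr l = fold(n->left);
    NodePtr r = fold(n->right);
    if (l->isConst && r->isConst) {
        switch (n->op) {
            case '+': return constant(l->value + r->value);
            case '-': return constant(l->value - r->value);
            case '*': return constant(l->value * r->value);
            default: break;
        }
    }
    return binary(n->op, l, r);  // keep the operator, with folded children
}

// Prints a tree in infix form with explicit parentheses.
std::string show(const NodePtr& n) {
    if (n->op == 0) return n->isConst ? std::to_string(n->value) : n->name;
    return "(" + show(n->left) + " " + n->op + " " + show(n->right) + ")";
}
```

For example, x * (2 + 3) folds to (x * 5), while (10 - 4) * (2 - 5) folds all the way down to -18. Expressions that depend on a variable, such as y + 1, are left unchanged.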

What Makes Compiler Projects So Powerful?

Building a compiler from scratch is one of the most enriching challenges in computer science. It brings together deep theoretical knowledge and practical engineering. You don’t just learn how programming languages work — you internalize core concepts like grammars, parsing, memory models, and type systems. You’ll apply data structures like trees and graphs, leverage recursion and finite-state machines, and develop a strong understanding of how high-level code turns into machine instructions. On top of that, it’s a standout portfolio project. Saying “I built a compiler” signals mastery, initiative, and technical depth. And let’s be honest — it’s just plain fun. You get to create a programming language and bring it to life.

From Language Design to Executables: Your Compiler Roadmap

To make this series practical and exciting, we won’t just explain concepts — we’ll build a real compiler from scratch. Along the way, we’ll also design a simple, beginner-friendly programming language. We’ve named it Flare, and we recommend you follow along by evolving your own language design too — defining the syntax, rules, and features based on your preferences.

This hands-on approach will help you learn by doing. Here’s what you’ll gain from the series:

- Designing a custom language from the ground up

- Writing a lexer using regular expressions

- Parsing source code with Bison

- Building Abstract Syntax Trees (ASTs)

- Performing semantic analysis

- Generating LLVM Intermediate Representation (IR)

- Compiling to real executable files — like a modern compiler

Everything will be implemented in C++, using modern tooling and best practices — with plenty of code examples and explanations tailored for clarity.

Blog Series Roadmap

Here’s the journey we’ll take together:

- Intro to Compilers (this post)

- Designing the Flare Language

- Lexical Analysis

- Parsing: Building a Parser with Bison

- Abstract Syntax Tree (AST) vs Parse Tree

- Semantic Analysis

- Code Generation with LLVM

- Wrap-up: Building an End-to-End Compiler

Each blog post is designed to be beginner-friendly, but packed with real knowledge — the kind that can serve you whether you’re a student, a developer, or just someone curious about how compilers work.

Wrapping Up: Let’s Write Code That Compiles Code

You don’t need a PhD in compilers or years of experience in language theory to follow this series. If you know some C++ and you’re willing to tinker, you’re more than ready.

This isn’t just about learning how compilers work — it’s about building one, step by step. You’ll walk away not just with knowledge, but with a working compiler you can call your own.

Let’s dive in — and bring a new programming language into the world, one line of code at a time.

Next up: [Designing Your Own Programming Language – Meet Flare]